Mapping the Future: The Rise of State-Level AI Regulation in the United States

- February 29, 2024

- Snippets

Practices & Technologies

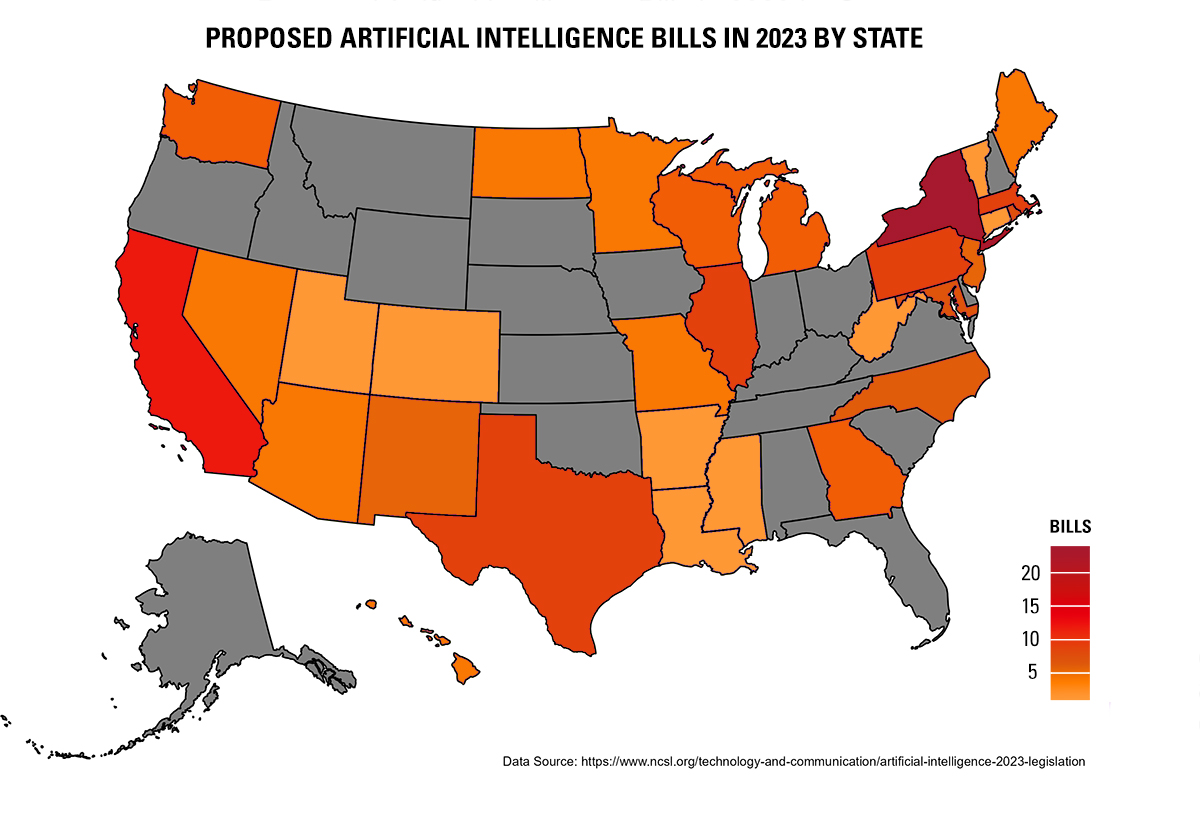

Currently, few state-level regulations in the United States specifically target machine learning and artificial intelligence (AI) models. However, the recent rise of powerful generative AI programs such as ChatGPT, DALL-E, Stable Diffusion and Sora has left state legislatures scrambling to determine the regulatory principles under which such AI models should operate. A landscape of increased AI regulation is almost certainly on the horizon, though the approaches each state takes may vary. This article summarizes statewide regulatory trends in the U.S., including Washington D.C. and Puerto Rico, as assessed by the National Conference of State Legislatures at the end of the 2023 legislative session. In total, the legislatures of 30 states, Washington D.C. and Puerto Rico collectively introduced more than 100 bills directed to AI, and 17 states and Puerto Rico enacted or adopted at least one AI-related bill. Based on our review of the proposed legislation, the varied state-level regulatory strategies fall into four main groups: AI studies, substantive regulation of AI usage, AI anti-discrimination safeguards and AI disclosure/transparency.

The first group of legislative efforts involves studying AI to understand its effects within individual states. Forty bills across the legislatures of 16 states and Puerto Rico would create a department, office or commission to study the impacts of AI, or would direct state officials to create inventories of AI tools. Some of these studies focus on specific industries, such as banking or housing, while others seek to understand AI's broader impact, such as its effect on employment within the state. One recently enacted AI study bill is Illinois H 3563, which amends the Department of Innovation and Technology Act to create a “Generative AI and Natural Language Processing Task Force.” The Task Force will include members of the Illinois House of Representatives and the Illinois Senate, educators, “experts in cybersecurity,” “experts in artificial intelligence” and members of both labor associations and business associations. The bill charges the Task Force with making recommendations to the General Assembly on regulating generative AI in areas including consumer protection, education, public services, civil rights and liberties, employment and cybersecurity. This bill and similar legislation in other states suggest an interest in understanding how to mitigate potential AI-based harms (e.g., dissemination of consumer information, AI-driven job displacement and AI disinformation) while assessing AI's potential benefits for states (e.g., improved delivery of education and other public services to citizens).

The second group of bills involves the substantive regulation of AI. Thirty-eight bills across the legislatures of 13 states and Washington D.C. seek to limit the reach and/or specific effects of AI tools. These bills range in scope from targeting specific industries (e.g., mental health, physical health, banking, housing or film) to regulating the use of AI by organizations above a certain size. In general, they restrict the use of AI tools without sufficient human oversight or regulate the conditions under which an AI tool can be applied to specific uses. One recently enacted example is Connecticut S 1103. Under this bill, state agencies in Connecticut may not implement “any system that employs artificial intelligence” unless the agency has first performed an assessment to ensure that the system will not result in “unlawful discrimination” or “unlawful disparate impact” based on age, ethnicity, religion, race, sex, gender identity or expression, sexual orientation, genetic information, marital status, pregnancy or lawful source of income, among other characteristics. Such regulations are expected to slow the adoption of the latest AI models and may foreshadow outright prohibitions on AI models operating without human oversight in certain contexts (e.g., insurance rate determination or medical diagnosis and treatment).

The third group of AI-based legislative efforts seeks to prohibit the use of AI in ways that result in discrimination. Ten bills across seven states and Washington D.C. seek to prohibit the use of AI tools that could produce discriminatory effects in certain industries, including healthcare, housing and employment. One bill of note is New York A 843, currently pending in the New York Assembly, which seeks to prohibit motor vehicle insurers from adjusting any “algorithm or equation” used to determine the cost of coverage based on factors including age, marital status, sex, sexual orientation, educational background or a “characteristic indicating or tending to indicate the socioeconomic status of an individual.” Such regulations point to state legislatures’ concern with unintended discriminatory impacts resulting from the use of AI models.

The fourth group of AI-based regulations imposes disclosure requirements on developers or users of AI models. Eleven bills in four states, seven of them in New York, require entities (either generally or in particular industries, such as state government and healthcare) to disclose to individuals when AI tools are used on their data. One such bill, currently pending in Pennsylvania, is H 1663, which would create a duty of disclosure regarding AI use by medical insurers. Under this duty, insurers must disclose to healthcare providers, covered individuals and the general public their use of “artificial intelligence-based algorithms” to determine the necessity or efficacy of medical treatments. The bill also requires algorithmic transparency: insurers must submit the specific “AI-based algorithms and training data sets that are being used or will be used” to the Pennsylvania Insurance Department. Such regulations could invite greater scrutiny of specific algorithms and require greater AI transparency toward both the government and the public.

In conclusion, although still in its infancy, state regulation of AI is quickly growing in urgency and scope. Bills creating groups to make recommendations or present reports to state legislatures generally set deadlines in late 2024, which suggests that state regulation of AI may expand in 2025 and beyond. However, the situation is dynamic, not least because the rapidly expanding capabilities of AI models could generate public support for regulating AI on a much faster timeline.

Article co-written by Yuri Levin-Schwartz, Ph.D., a law clerk at MBHB.